How to Connect Directly to your GCP VPC

This tutorial shows how to deploy an n2x-node within a Google Cloud VPC (Virtual Private Cloud) and configure it to directly access existing VM instances in a private subnet. This removes the need for a bastion host and gives any node in the n2x.io network topology direct, encrypted private access.

Here is a high-level overview of the tutorial setup architecture:

Note

This guide demonstrates how to deploy a single n2x-node within a single availability zone. To achieve high availability failover, multiple n2x-nodes can be deployed across multiple availability zones and configured to export the same routes.

In our setup, we will be using the following components:

- Google Cloud VPC (Virtual Private Cloud) provides networking for your cloud-based services that is global, scalable, and flexible. For more info please visit the GCP Documentation.

- n2x-node is an open-source agent that runs on the machines you want to connect to your n2x.io network topology. For more info please visit n2x.io Documentation.

Before you begin

In order to complete this tutorial, you must meet the following requirements:

- A GCP account.

- The Compute Engine API should be enabled.

- An n2x.io account and one subnet with the 10.254.1.0/24 prefix.

Note

Please note that this tutorial uses Ubuntu 24.04 (Noble Numbat) Linux on the amd64 architecture.

Step-by-step Guide

Step 1 - Deploying an n2x-node in GCP

Follow this step-by-step guide to set up an n2x-node in GCP. This guide will walk you through creating all the necessary infrastructure within the cloud service provider, including a VPC, public subnet, firewall rules and instance.

Step 2 - Configuring n2x-node instance to export VPC CIDR

Before configuring n2x-node-01 to export VPC CIDR, we need to enable IPv4 forwarding on both the Linux OS and the Google Cloud VM Instance. Here's how to do it:
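As a minimal sketch of the Linux side, assuming a standard Ubuntu host, IPv4 forwarding can be checked and enabled through sysctl as shown below. The helper function names and the drop-in file name `99-ip-forward.conf` are illustrative choices, not part of n2x-node. On the Google Cloud side, the relevant setting is the VM's IP forwarding property (`canIpForward`), which `gcloud compute instances create` exposes as the `--can-ip-forward` flag.

```shell
#!/bin/sh
# Succeeds when the given state file holds "1".
# Defaults to the live kernel setting exposed under /proc.
ip_forward_enabled() {
  [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2>/dev/null)" = "1" ]
}

# Turns forwarding on immediately and persists it across reboots (needs sudo).
# The drop-in file name is an assumption; any *.conf under /etc/sysctl.d is read at boot.
enable_ip_forward() {
  sudo sysctl -w net.ipv4.ip_forward=1
  echo 'net.ipv4.ip_forward = 1' | sudo tee /etc/sysctl.d/99-ip-forward.conf >/dev/null
}

# Report the current state without changing anything.
if ip_forward_enabled; then
  echo "IPv4 forwarding is enabled"
else
  echo "IPv4 forwarding is disabled; call enable_ip_forward to turn it on"
fi
```

The script only reports the current state; `enable_ip_forward` is left as an explicit call so that nothing is changed on the host by accident.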

Now, to make the VPC CIDR available on your n2x.io subnet, we need to configure n2x-node-01 to export this CIDR. To do this, edit /etc/n2x/n2x-node.yml and add the following configuration:

# network routes behind this node (optional)
routes:
  export:
    - <VPC CIDR>
  import:

Info

Replace <VPC CIDR> with the VPC CIDR value, which in this case is 10.0.1.0/24.

Restart the n2x-node service for this change to take effect:

sudo systemctl restart n2x-node

Step 3 - Deploying Private Google Cloud VM Instances

Before creating the Google Cloud VM Instances we need to create a private subnet and some additional resources:

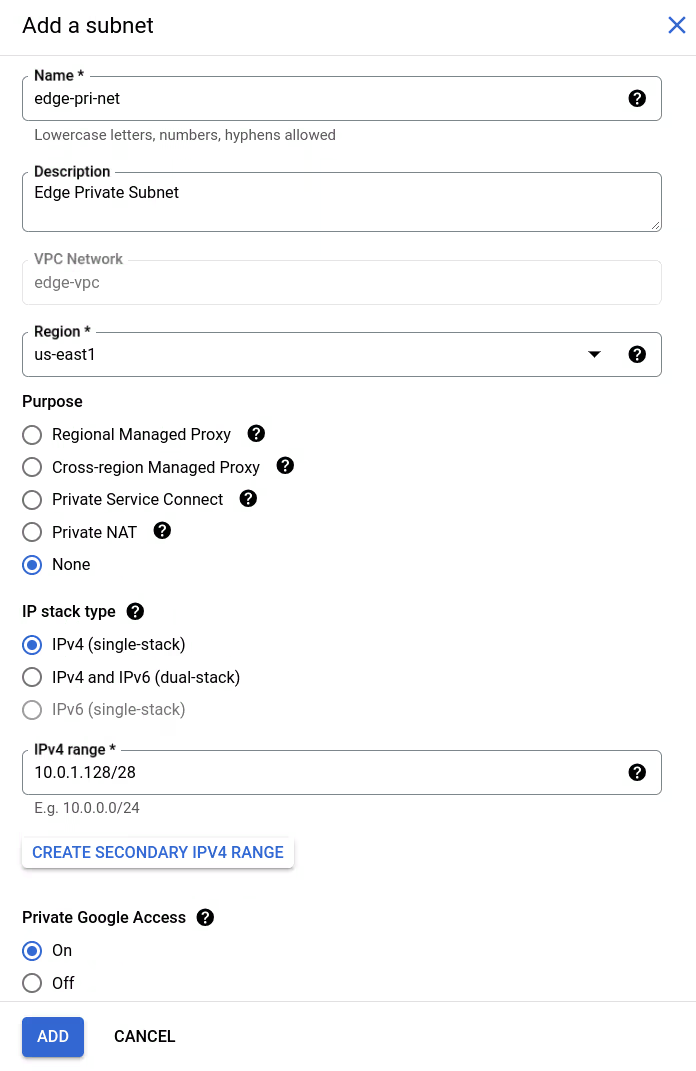

- Create a private subnet in the edge-vpc as described in the following table. For more information, see Subnets.

Subnet Settings

| Name | Description | Region | IPv4 range | Private Google Access |
|------|-------------|--------|------------|-----------------------|
| edge-pri-net | Edge Private Subnet | us-east1 | 10.0.1.128/28 | On |

-

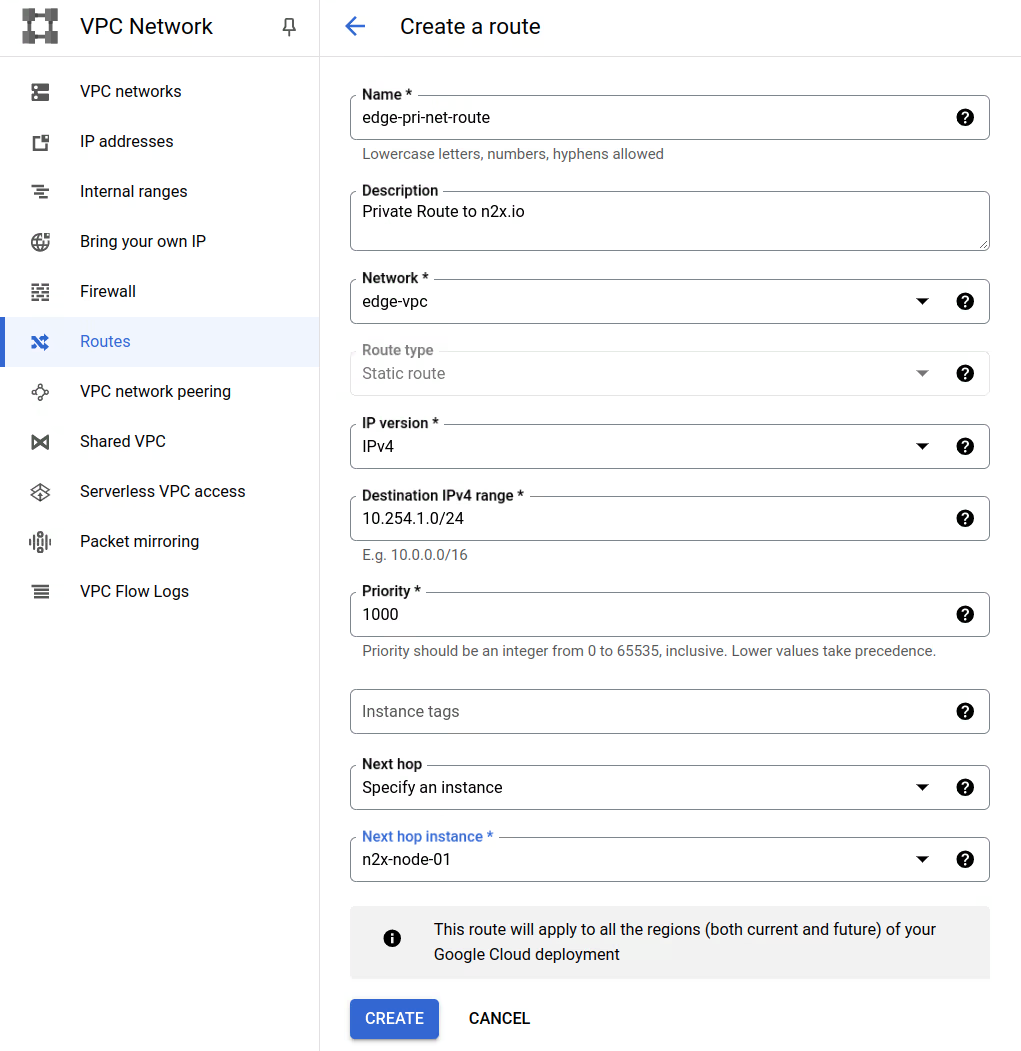

Create a route in the

edge-vpcwith the destination10.254.1.0/24(n2x.io subnet CIDR) to be routed through then2x-node-01. For more information, see Routing in Google Cloud.Route Settings

Name Network Destination Instance Tags Next Hop Instance edge-pri-net-route edge-vpc 10.254.1.0/24 app-instances n2x-node-01

-

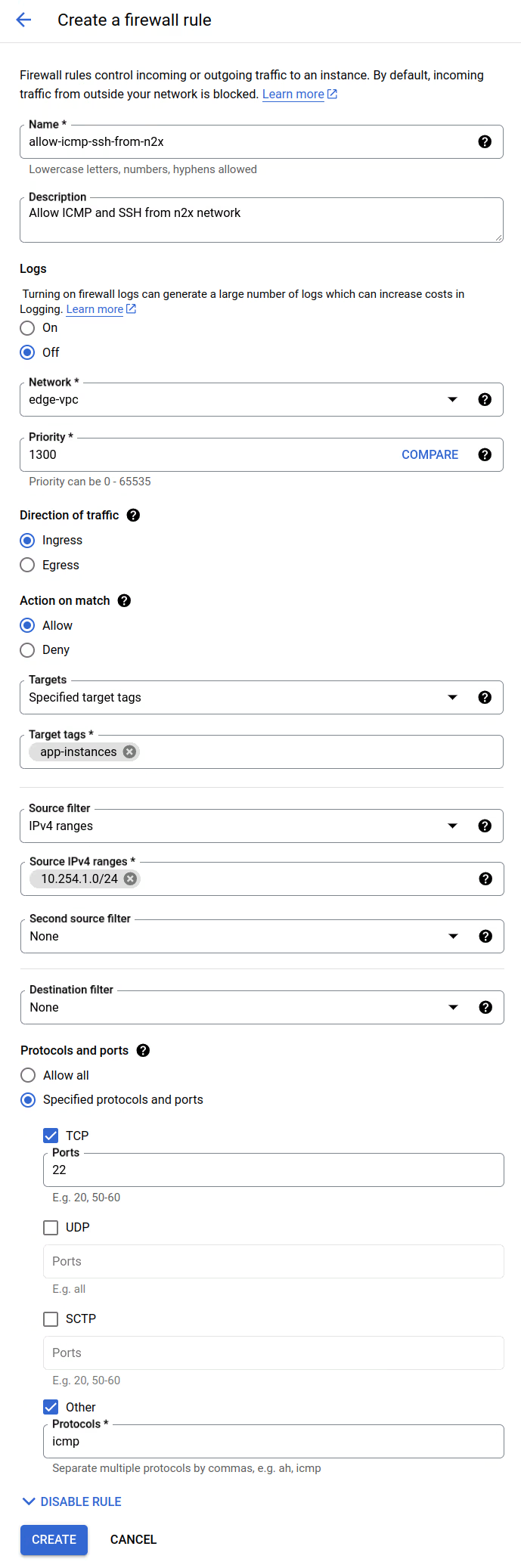

Now let's create a firewall rule to allow ICMP and SSH access from the n2x.io subnet (

10.254.1.0/24). In theedge-vpc, click on theFirewallstab and then click on theAdd Firewall Rulebutton. For more information, see FirewallsFirewall Rule Settings

Name Network Priority Direction of traffic Action on match Target tags Source IPv4 Ranges Protocols and Ports allow-icmp-ssh-from-n2x edge-vpc 1300 Ingress Allow app-instances 10.254.1.0/24 TCP:22 ICMP

-

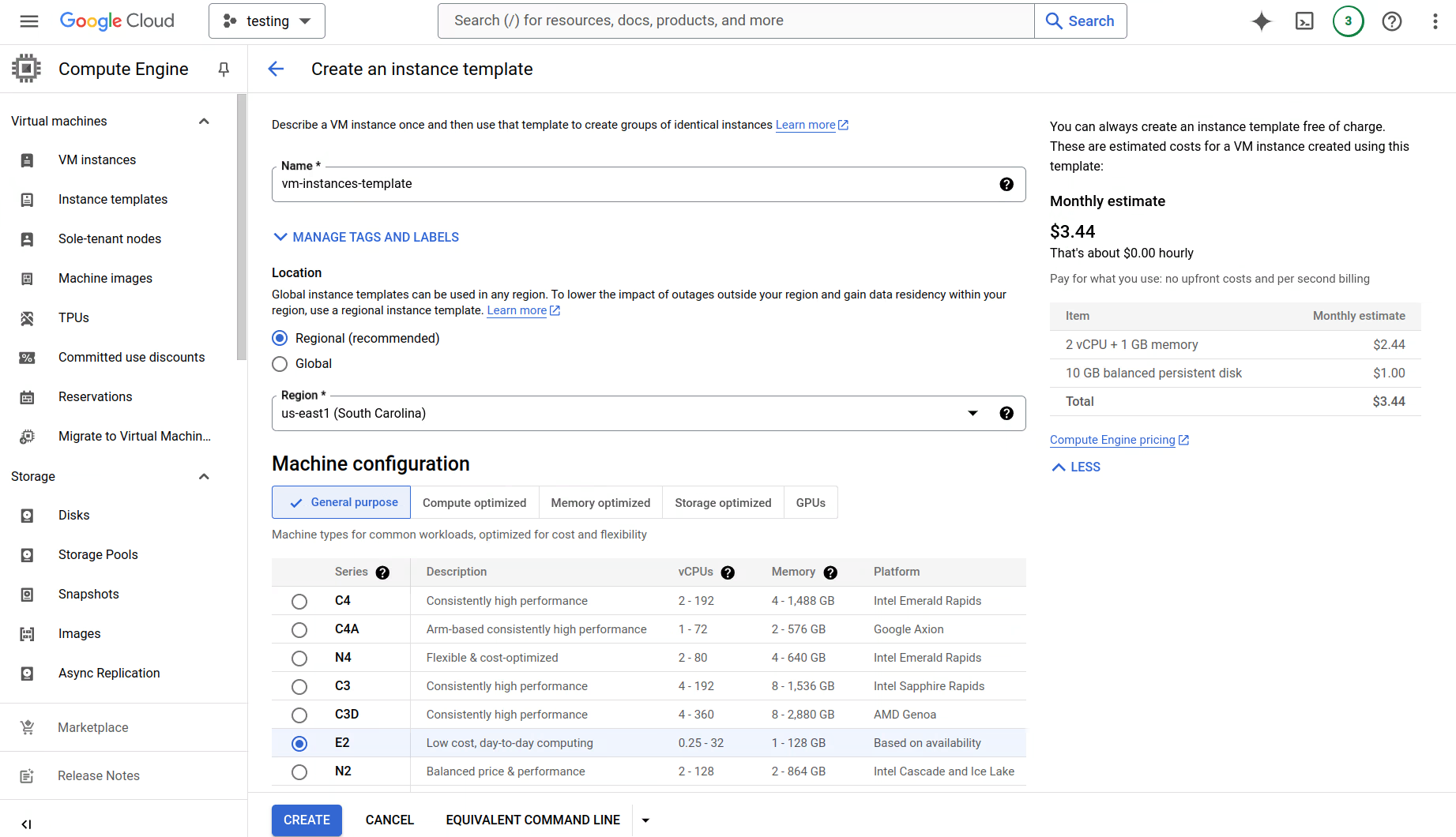

Create a instance template named

vm-instances-template, we'll use this template to configure our instance groups. For more information, see Create instance templates:Instance Template Settings

Region OS Version Network Tag Network Subnetwork External IPv4 address us-east1 Ubuntu Ubuntu 24.04 LTS app-instances edge-vpc edge-pri-net None
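The console steps above can also be scripted. The following is a sketch using the gcloud CLI instead of the web console that the tutorial uses. The resource names and values come from the settings above, while the zone (`us-east1-b`) and the Ubuntu image family and project are assumptions to adjust for your environment. Commands are only printed unless `APPLY=1` is set, so the script can be reviewed as a dry run first.

```shell
#!/bin/sh
# Assumed zone of n2x-node-01; the tutorial only fixes the region (us-east1).
ZONE="${ZONE:-us-east1-b}"

# Print the command by default; execute it only when APPLY=1.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '%s\n' "$*"; fi
}

# Private subnet with Private Google Access turned on.
create_private_subnet() {
  run gcloud compute networks subnets create edge-pri-net \
    --network=edge-vpc --region=us-east1 --range=10.0.1.128/28 \
    --enable-private-ip-google-access
}

# Route traffic for the n2x.io subnet through n2x-node-01 (tagged instances only).
create_n2x_route() {
  run gcloud compute routes create edge-pri-net-route \
    --network=edge-vpc --destination-range=10.254.1.0/24 \
    --next-hop-instance=n2x-node-01 --next-hop-instance-zone="$ZONE" \
    --tags=app-instances
}

# Allow ICMP and SSH from the n2x.io subnet to the tagged instances.
create_firewall_rule() {
  run gcloud compute firewall-rules create allow-icmp-ssh-from-n2x \
    --network=edge-vpc --priority=1300 --direction=INGRESS --action=ALLOW \
    --rules=tcp:22,icmp --source-ranges=10.254.1.0/24 \
    --target-tags=app-instances
}

# Instance template without an external IP; image family and project are assumptions.
create_instance_template() {
  run gcloud compute instance-templates create vm-instances-template \
    --region=us-east1 --network=edge-vpc --subnet=edge-pri-net --no-address \
    --tags=app-instances \
    --image-family=ubuntu-2404-lts-amd64 --image-project=ubuntu-os-cloud
}

create_private_subnet
create_n2x_route
create_firewall_rule
create_instance_template
```

Running the script as is prints the four commands. Running it with `APPLY=1` in the environment executes them against the currently configured gcloud project.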

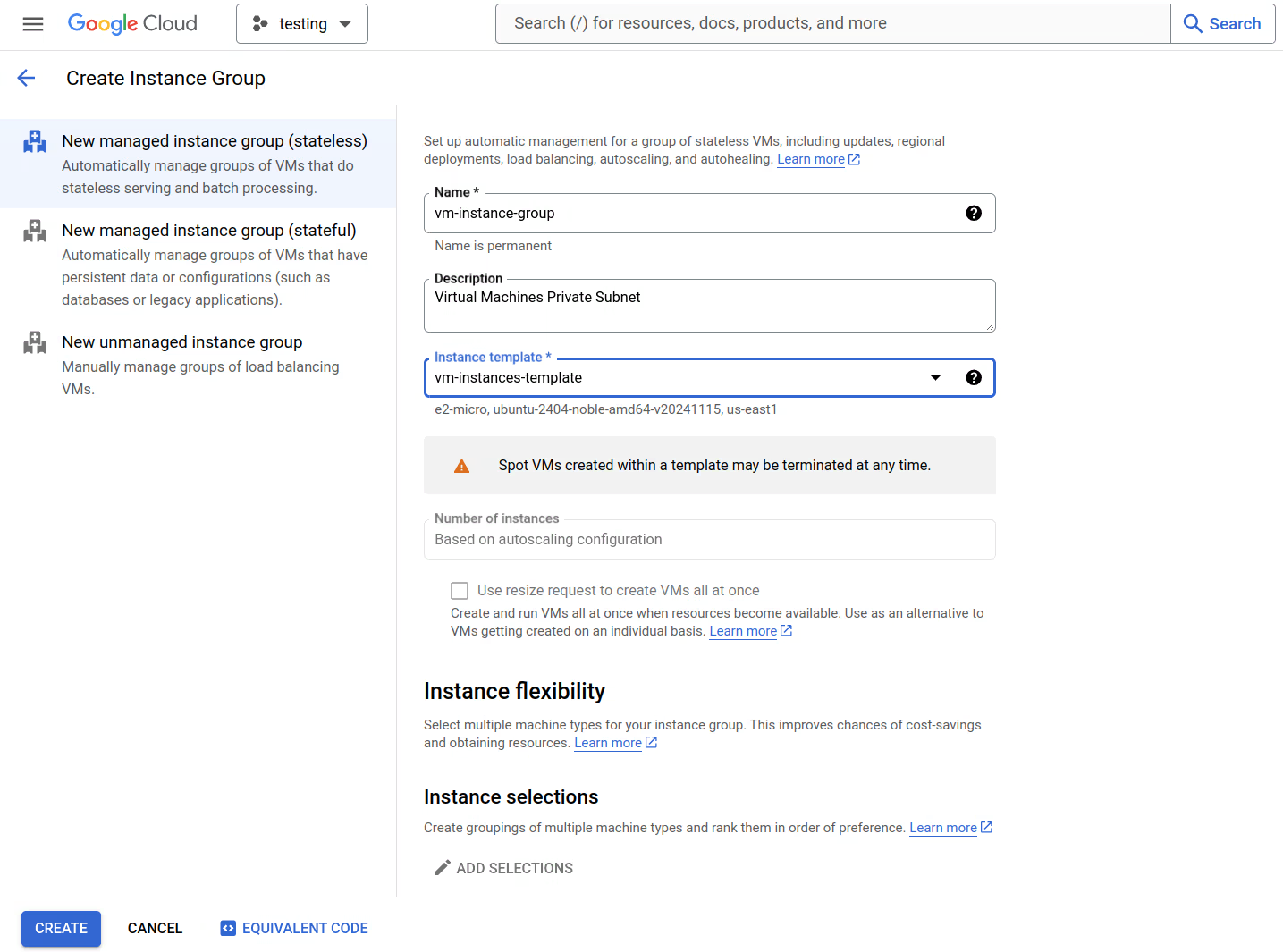

Now we can create a managed instance group (MIG) named vm-instance-group to automatically adjust the number of Google Cloud VM Instances based on demand.

The key configuration step for the Managed Instance Group (MIG) is to associate it with the previously created instance template named vm-instances-template. Refer to Creating managed instance groups (MIGs) for more details.

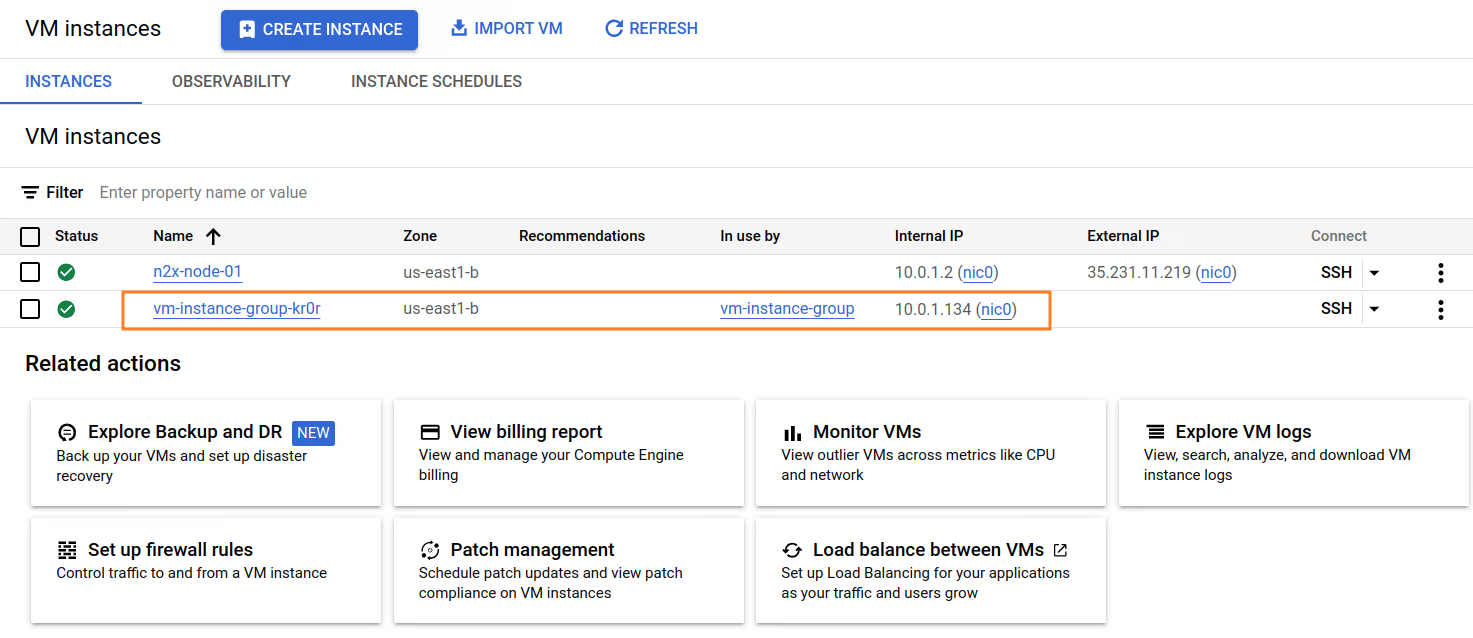

Once the managed instance group is configured, a new Google Cloud VM Instance will be deployed in the private subnet.
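For reference, the managed instance group can also be created from the command line. This is a sketch with the gcloud CLI, not the console flow the tutorial follows. The zone (`us-east1-b`) and the initial size of one instance are assumptions. As a safety measure, commands are only printed unless `APPLY=1` is set.

```shell
#!/bin/sh
# Assumed zone inside the us-east1 region used by the tutorial.
ZONE="${ZONE:-us-east1-b}"

# Print the command by default; execute it only when APPLY=1.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '%s\n' "$*"; fi
}

# Create the MIG from the instance template; --size=1 is an assumed starting size.
create_mig() {
  run gcloud compute instance-groups managed create vm-instance-group \
    --template=vm-instances-template --size=1 --zone="$ZONE"
}

# List the VMs the group manages, to find the new instance's name and status.
list_mig_instances() {
  run gcloud compute instance-groups managed list-instances vm-instance-group \
    --zone="$ZONE"
}

create_mig
list_mig_instances
```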

Note

The newly deployed Google Cloud VM Instance will be assigned a private IP address, such as 10.0.1.134 in this example. We'll use this private IP address to connect from server-01 during the validation step.
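To see why this address is reachable through the exported route, it helps to confirm that 10.0.1.134 falls inside both the private subnet (10.0.1.128/28) and the exported VPC CIDR (10.0.1.0/24). The following is a small sketch of that arithmetic in plain shell; the function names are illustrative.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Runs in a subshell so the caller's IFS and positional parameters stay untouched.
ip_to_int() {
  (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  )
}

# Succeeds when the address in $1 lies inside the CIDR block in $2.
ip_in_cidr() {
  bits="${2#*/}"
  # Network mask with the top $bits bits set (4294967295 is 0xFFFFFFFF).
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "${2%/*}") & $mask )) ]
}

VM_IP="10.0.1.134"
for cidr in 10.0.1.128/28 10.0.1.0/24; do
  if ip_in_cidr "$VM_IP" "$cidr"; then
    echo "$VM_IP is inside $cidr"
  else
    echo "$VM_IP is NOT inside $cidr"
  fi
done
```

A /28 block starting at 10.0.1.128 covers 10.0.1.128 through 10.0.1.143, so the script reports the address as inside both ranges.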

Step 4 - Connecting server-01 to our n2x.io network topology

Now we need to connect server-01 to the n2x.io network topology so that it can access the Google Cloud VM Instances.

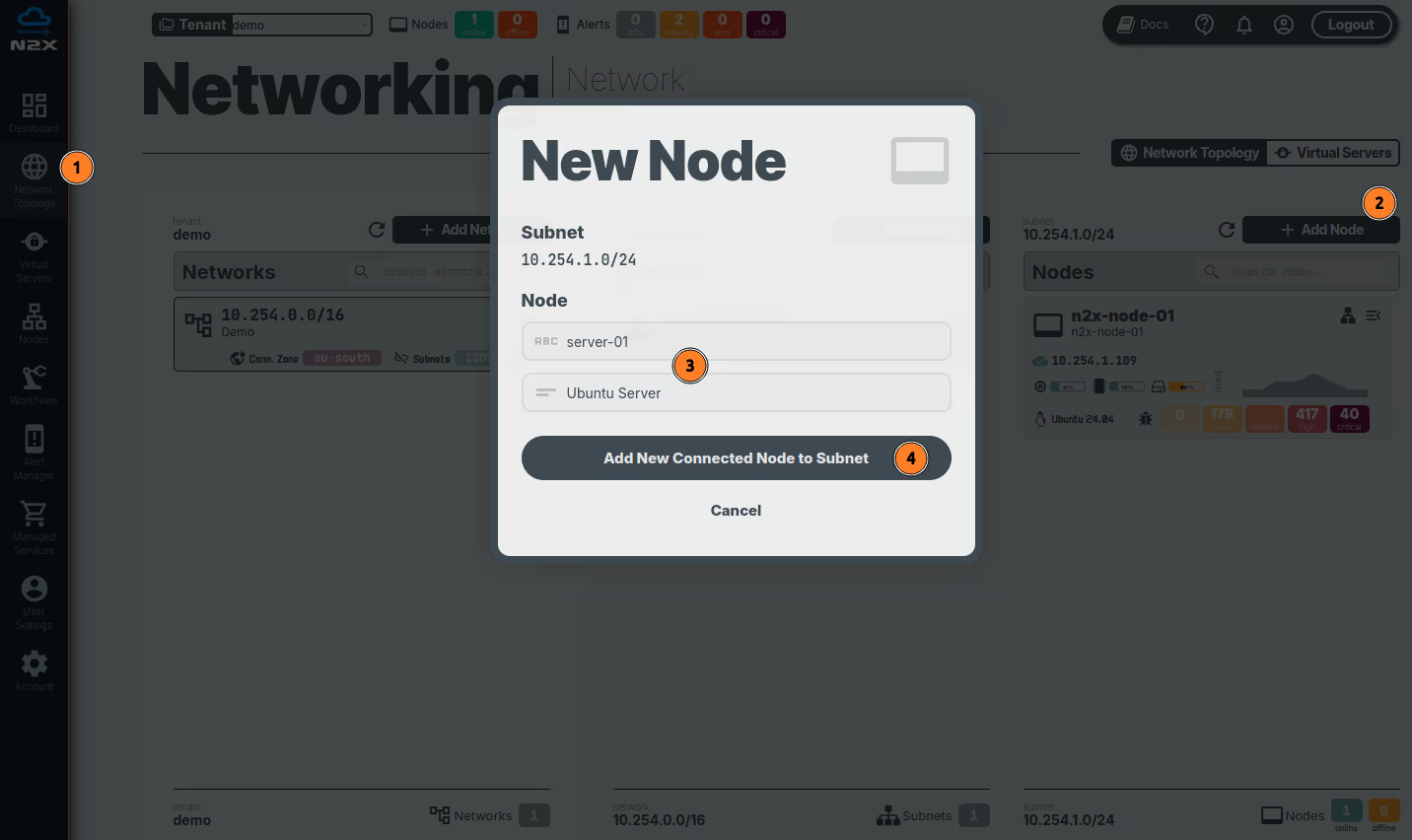

Adding a new node in a subnet with n2x.io is very easy. Here's how:

- Head over to the n2x WebUI and navigate to the Network Topology section in the left panel.
- Click the Add Node button and ensure the new node is placed in the same subnet as n2x-node-01.
- Assign a name and description for the new node.
- Click Add New Connected Node to Subnet.

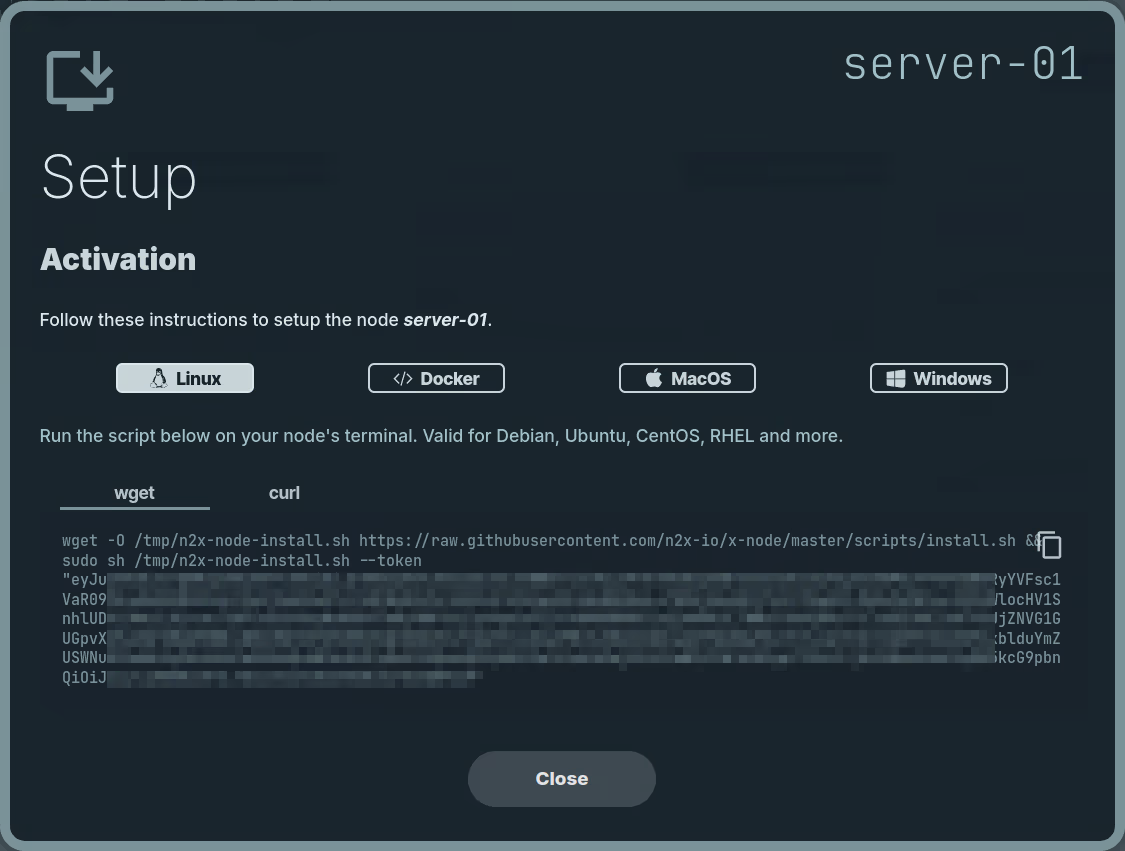

Here, we can select the environment where we are going to install the n2x-node agent. In this case, we are going to use Linux:

Run the script in the server-01 terminal and check that the service is running with the command:

systemctl status n2x-node

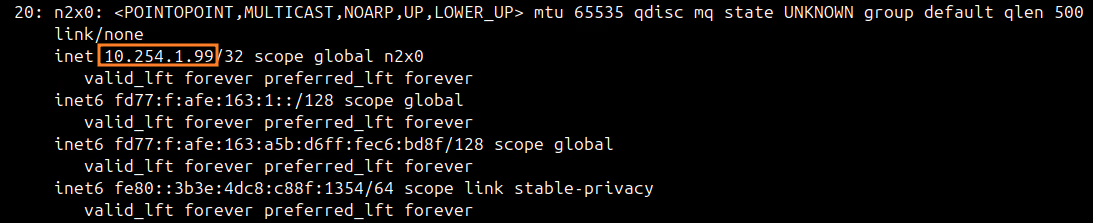

You can use the ip addr show dev n2x0 command on server-01 to check the IP assigned to this node:

At this point, we need to make the VPC CIDR available on server-01 by importing this CIDR. To do this, edit /etc/n2x/n2x-node.yml on server-01 and add the following configuration:

# network routes behind this node (optional)
routes:
  export:
    -
  import:
    - <VPC CIDR>

Info

Replace <VPC CIDR> with the VPC CIDR value, which in this case is 10.0.1.0/24.

Restart the n2x-node service for this change to take effect:

sudo systemctl restart n2x-node

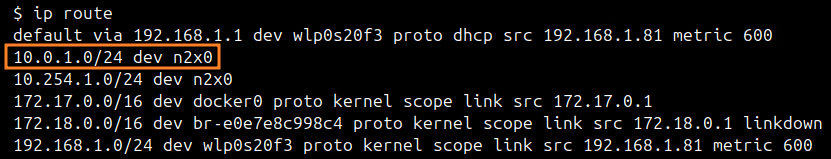

We can check the local routing table in server-01 with the following command:

ip route
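If you prefer a scripted check over reading the table by eye, the sketch below looks for the imported CIDR in the `ip route` output. The helper name `has_route` is illustrative, and the check only assumes that each route line starts with its destination prefix, which is how `ip route` prints them.

```shell
#!/bin/sh
# Succeeds when the routing table read from stdin has an entry whose
# destination (first field) is exactly the CIDR given in $1.
has_route() {
  awk -v cidr="$1" '$1 == cidr { found = 1 } END { exit !found }'
}

# Only query the live table when the ip tool is available on this machine.
if command -v ip >/dev/null 2>&1; then
  if ip route | has_route "10.0.1.0/24"; then
    echo "route to 10.0.1.0/24 is present"
  else
    echo "route to 10.0.1.0/24 is missing"
  fi
fi
```

If the route is reported as missing, check that the import entry in /etc/n2x/n2x-node.yml is correct and that the n2x-node service was restarted.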

Step 5 - Verify your Connection

Once you've completed all configuration steps, you should be able to ping or connect via SSH to any Google Cloud VM Instance in the Google Cloud VPC from any device connected to the n2x.io subnet.

We'll now verify connectivity to the Google Cloud VM Instance from the server-01 device. In this scenario, the Google Cloud VM Instance has been assigned the private IP address 10.0.1.134.

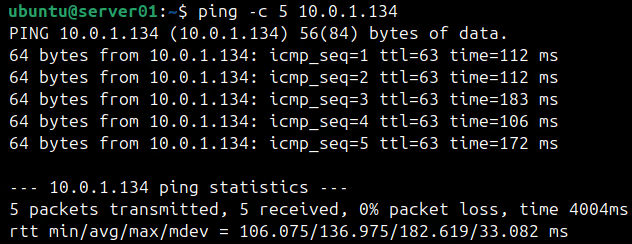

Ping Test

Let's first check if you can ping the Google Cloud VM Instance from server-01. Here's the command:

ping -c 5 10.0.1.134

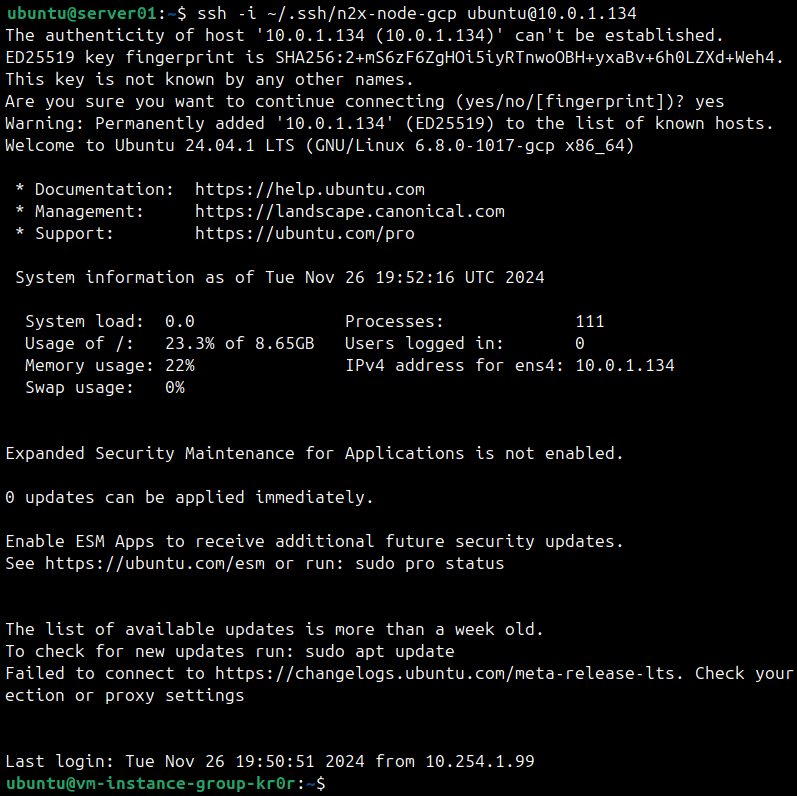

SSH Connection

Now we can attempt an SSH connection to the Google Cloud VM Instance from server-01:

ssh -i ~/.ssh/n2x-node-gcp <user>@10.0.1.134

Success

That's it! The Google Cloud VPC is now accessible from any device connected to your n2x.io virtual private network.

Info

For even more granular control over traffic flow across your n2x.io network topology, explore n2x.io's security policies.

Conclusion

This article guided you through establishing a direct, secure connection between your n2x.io network topology and your Google Cloud VPC.

This setup offers a secure, cost-effective, and streamlined approach to accessing your Google Cloud VPC resources from your n2x.io network.