Build a Multi-Cloud Object Storage with MinIO Operator

In the era of data-driven decision-making and cloud-native applications, efficient and scalable storage solutions are essential. Multi-cloud storage follows the model established in the public cloud, and public cloud providers have unanimously adopted cloud-native object storage.

One such solution is MinIO, an open-source, high-performance, and Amazon S3-compatible object storage system. MinIO allows organizations to build their private cloud storage infrastructure, offering the scalability and flexibility needed to handle large amounts of data.

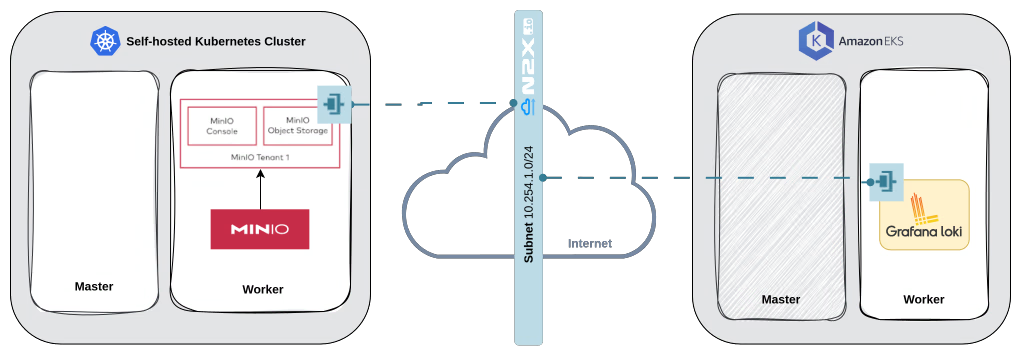

In this tutorial, we will deploy the MinIO Operator in a self-hosted Kubernetes cluster. This will allow us to create a MinIO Tenant with a private Object Storage where we can create some buckets. We will also deploy Grafana Loki in an EKS cluster and configure it to use the buckets created in the MinIO Object Storage. Finally, we will create a network topology using n2x.io that allows Grafana Loki and the MinIO Object Storage to communicate securely.

Here is a high-level overview of the tutorial's setup architecture:

In our setup, we will be using the following components:

- MinIO is a high-performance, S3-compatible object store. It is built for large-scale AI/ML, data lake, and database workloads. It is software-defined and runs on any cloud or on-premises infrastructure. For more info, please visit the MinIO Documentation.

- Grafana Loki is a log aggregation system designed to store and query logs from all your applications and infrastructure. For more info, please visit the Grafana Loki Documentation.

- n2x-node is an open-source agent that runs on the devices or applications you want to connect to the n2x.io platform. For more info, please visit the n2x.io Documentation.

Before you begin

In order to complete this tutorial, you must meet the following requirements:

- Access to two Kubernetes clusters (self-hosted and EKS), version v1.27.x or greater.

- An n2x.io account created and one subnet with the 10.254.1.0/24 prefix.

- The n2xctl command-line tool installed, version v0.0.3 or greater.

- The kubectl command-line tool installed, version v1.27.x or greater.

- The helm command-line tool installed, version v3.10.1 or greater.

Note

Please note that this tutorial uses Ubuntu 22.04 (Jammy Jellyfish) Linux on the amd64 architecture.

Step-by-step Guide

Step 1: Install the MinIO Operator in a self-hosted Kubernetes cluster

Set your context to the self-hosted Kubernetes cluster:

kubectl config use-context self-hosted

MinIO requires a storage class that supports dynamic provisioning. Since this tutorial is scoped as a demo, we will deploy the Local Path Provisioner.

If you deploy MinIO on a multi-node cluster, you may consider an overlay storage layer such as Longhorn or Portworx. When running on bare metal servers with high-performance SSD and NVMe disks, MinIO recommends using its own CSI driver, DirectPV (formerly called DirectCSI).

We can deploy the Local Path Provisioner by executing the following command:

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

This creates a new storage class that the MinIO operator can use.

$ kubectl get storageclass
NAME         PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-path   rancher.io/local-path   Delete          WaitForFirstConsumer   false                  6m16s
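Before installing the Operator, it can help to confirm from a script that the storage class is really there. The following is a minimal sketch, assuming kubectl points at the self-hosted cluster; `storageclass_exists` is a hypothetical helper name, not a kubectl feature.

```shell
# Sketch: check that a storage class exists before installing the Operator.
# "storageclass_exists" is a hypothetical helper name used for illustration.

storageclass_exists() {
  local name="$1"
  # `kubectl get storageclass -o name` prints one line per class,
  # for example "storageclass.storage.k8s.io/local-path".
  kubectl get storageclass -o name \
    | grep -qxF "storageclass.storage.k8s.io/${name}"
}

# Usage (requires access to the cluster):
#   storageclass_exists local-path && echo "local-path is available"
```

The function returns a non-zero status when the class is missing, so it can guard a later `helm install` step in a script.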

We are now ready to install the MinIO operator on a self-hosted Kubernetes cluster using the official Helm chart:

- First, let’s add the following Helm repo:

  helm repo add minio-operator https://operator.min.io

- Update all the repositories to ensure helm is aware of the latest versions:

  helm repo update

- Run the helm install command to install the Operator in the minio-operator namespace:

  helm install operator minio-operator/operator -n minio-operator --create-namespace --version v5.0.12

- Once it is done, we can verify if all of the pods in the minio-operator namespace are up and running:

  kubectl -n minio-operator get pod

  NAME                              READY   STATUS    RESTARTS   AGE
  console-6c96c79d49-sg6b4          1/1     Running   0          66s
  minio-operator-67d44f65f5-cf8rl   1/1     Running   0          66s
  minio-operator-67d44f65f5-lv22h   1/1     Running   0          66s

- Retrieve the Console Access Token

  The MinIO Operator uses a JSON Web Token (JWT) saved as a Kubernetes Secret for controlling access to the Operator Console.

  Use the following command to retrieve the JWT for login. You must have permission within the Kubernetes cluster to read secrets:

  kubectl -n minio-operator get secret console-sa-secret -o jsonpath="{.data.token}" | base64 -d && echo

  Warning

  If the output includes a trailing % make sure to omit it from the result.
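A trailing % typically comes from the shell marking output that lacks a final newline, not from the token itself. One way to avoid copying it by accident is to capture the token in a variable. This is a minimal sketch; `decode_secret_field` and `console_jwt` are hypothetical helper names, and the Secret name is the one used in this tutorial.

```shell
# Sketch: read the Operator Console JWT into a variable instead of copying
# it from the terminal. The helper names below are hypothetical.

# Decode a base64-encoded Secret field read from stdin.
decode_secret_field() {
  base64 -d
}

# Fetch the token field from the console-sa-secret Secret and decode it.
console_jwt() {
  kubectl -n minio-operator get secret console-sa-secret \
    -o jsonpath='{.data.token}' | decode_secret_field
}

# Usage (requires permission to read Secrets in minio-operator):
#   TOKEN="$(console_jwt)"
#   printf '%s\n' "$TOKEN"
```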

Step 2: Deploy a Tenant using the MinIO Operator Console

The MinIO Operator Console is designed to deploy multi-node distributed MinIO Deployments. For this use case, we will create a Tenant named minio-tenant-1, where we will later create the buckets for the Grafana Loki backend.

- MinIO supports no more than one MinIO tenant per namespace. So we are going to create a new namespace for the MinIO Tenant:

  kubectl create namespace minio-tenant-1

- Access the Operator Console:

  We can port-forward the console service and access the MinIO Operator Console directly from http://localhost:9090/:

  kubectl port-forward svc/console -n minio-operator 9090:9090

  Tip

  Once you access the Console, use the Console JWT obtained in the previous step to log in.

- Build the Tenant Configuration:

  Click Create Tenant + to open the Tenant Creation workflow:

  Configure the tenant with the following requirements and click Create to create the new tenant:

  About the Setup values:

  - Storage Class: Specify the Kubernetes Storage Class the Operator uses when generating Persistent Volume Claims for the Tenant. We will select the local-path storage class deployed previously.

  - Number of Servers: Ensure that this value does not exceed the number of available worker nodes in the Kubernetes cluster.

  - Drives per Server: The number of volumes in the new MinIO Tenant Pool. MinIO generates one Persistent Volume Claim (PVC) for each volume.

  Info

  You can get more detailed information about the setup here

  The Operator Console displays credentials for connecting to the MinIO Tenant. You must download and secure these credentials at this stage. You cannot trivially retrieve these credentials later.

- Connect to the Tenant:

  Use the following command to list the services created by the MinIO Operator in the minio-tenant-1 namespace:

  kubectl -n minio-tenant-1 get svc

  NAME                     TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
  minio                    LoadBalancer   10.96.2.76     <pending>     80:32688/TCP     38s
  minio-tenant-1-console   LoadBalancer   10.96.33.110   <pending>     9090:32496/TCP   38s
  minio-tenant-1-hl        ClusterIP      None           <none>        9000/TCP         38s

  Info

  - Applications internal to the Kubernetes cluster should use the minio service for performing object storage operations on the Tenant.

  - Administrators of the Tenant should use the minio-tenant-1-console service to access the MinIO Console and manage the Tenant, such as provisioning users, groups, buckets and policies for the Tenant.

  - For applications external to the Kubernetes cluster, MinIO recommends you configure Ingress or a Load Balancer to expose the MinIO Tenant services. But in this scenario, we are going to use a safer alternative. We are going to use n2x.io to create a network topology that allows us to connect remote services to the object storage to avoid exposing the endpoint publicly.
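To confirm that the minio service answers object storage requests, one option is to probe MinIO's liveness endpoint, `/minio/health/live`. The sketch below assumes the Tenant serves plain HTTP, as the port 80 service above suggests; `minio_health_url` and `minio_is_live` are hypothetical helper names.

```shell
# Sketch: probe the MinIO liveness endpoint of the Tenant.
# "minio_health_url" and "minio_is_live" are hypothetical helper names.

# Build the liveness URL for a given host[:port]; plain HTTP is assumed.
minio_health_url() {
  printf 'http://%s/minio/health/live\n' "$1"
}

# Succeeds when the endpoint answers with a successful HTTP status.
minio_is_live() {
  curl --silent --fail --output /dev/null --max-time 5 \
    "$(minio_health_url "$1")"
}

# Usage, with the service port-forwarded in another terminal:
#   kubectl -n minio-tenant-1 port-forward svc/minio 9000:80
#   minio_is_live localhost:9000 && echo "MinIO is live"
```

The same function can later be pointed at the n2x.io IP address of the service from a machine connected to the subnet.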

Step 3: Create the Grafana Loki buckets in the MinIO Tenant

We need to connect to the MinIO Console of the minio-tenant-1 Tenant to create the buckets that Grafana Loki uses as its backend.

We can port-forward the minio-tenant-1-console service and access the MinIO Tenant Console directly from http://localhost:9091/:

kubectl port-forward svc/minio-tenant-1-console -n minio-tenant-1 9091:9090

Tip

Once you access the Console, log in with the Access Key and Secret Key that were generated automatically when the MinIO Tenant was created.

Now select Create a Bucket to create a new bucket on the deployment. We need to repeat this step 3 times to create 3 buckets with the following names: loki-chunks, loki-ruler and loki-admin.
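The same three buckets can also be created from the command line with the MinIO Client (mc) instead of the Console. This is a minimal sketch, assuming mc is installed and the Tenant API is reachable over plain HTTP; the alias name `tenant1` and the function name `create_loki_buckets` are arbitrary choices for illustration.

```shell
# Sketch: create the three Loki buckets with the MinIO Client (mc).
# Assumptions: mc is installed, the alias name "tenant1" is arbitrary, and
# the Tenant API is reachable at the URL passed as the first argument.

LOKI_BUCKETS="loki-chunks loki-ruler loki-admin"

create_loki_buckets() {
  local url="$1" access_key="$2" secret_key="$3" bucket
  # Register the Tenant endpoint and credentials under a local alias.
  mc alias set tenant1 "$url" "$access_key" "$secret_key" || return 1
  for bucket in $LOKI_BUCKETS; do
    # --ignore-existing makes the call safe to repeat.
    mc mb --ignore-existing "tenant1/${bucket}" || return 1
  done
}

# Usage, with the minio service port-forwarded in another terminal:
#   kubectl -n minio-tenant-1 port-forward svc/minio 9000:80
#   create_loki_buckets http://localhost:9000 "<access key>" "<secret key>"
```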

Step 4: Connect minio-tenant-1 object storage to our n2x.io network topology

We need to connect minio-tenant-1 object storage to our n2x.io network topology so that it is accessible to external applications outside the Kubernetes cluster.

To connect a new k8s service to the n2x.io subnet, you can execute the following command:

n2xctl k8s svc connect

The command will typically prompt you to select the Tenant, Network, and Subnet from your available n2x.io topology options. Then, you can choose the service you want to connect by selecting it with the space key and pressing enter. In this case, we will select minio-tenant-1: minio.

Note

The first time you connect a k8s svc to the subnet, you need to deploy an n2x.io Kubernetes Gateway.

To find the IP address assigned to the MinIO Service:

- Access the n2x.io WebUI and log in.

- In the left menu, click on the Network Topology section and choose the subnet associated with your MinIO service (e.g., subnet-10-254-0).

- Click on the IPAM section. Here, you'll see both IPv4 and IPv6 addresses assigned to the minio.minio-tenant-1.n2x.local endpoint. Identify the IP address you need for your specific use case.

Info

Take note of the IP address assigned to the minio.minio-tenant-1.n2x.local endpoint; we will use it later to connect to the Object Storage.

Step 5: Install Grafana Loki stack in EKS cluster

Set your context to the EKS cluster:

kubectl config use-context eks-cluster

We are going to deploy Grafana Loki in scalable mode, a highly available architecture designed to work with S3-compatible object storage, on an EKS cluster using Helm:

- Add Grafana’s chart repository to Helm:

  helm repo add grafana https://grafana.github.io/helm-charts

- Update the chart repository:

  helm repo update

- Create the configuration file values.yaml with this information:

  loki:
    commonConfig:
      replication_factor: 1
    auth_enabled: false
    storage:
      bucketNames:
        chunks: loki-chunks
        ruler: loki-ruler
        admin: loki-admin
      type: s3
      s3:
        endpoint: http://10.254.1.29
        secretAccessKey: <Minio secret access key>
        accessKeyId: <Minio access key ID>
        s3ForcePathStyle: true
        insecure: true
  write:
    replicas: 1
  read:
    replicas: 1
  backend:
    replicas: 1

  The endpoint variable should have the n2x.io IP address (in this example: 10.254.1.29) assigned to the MinIO Service installed previously. The secretAccessKey and accessKeyId variables should have the credentials generated automatically when the MinIO Tenant was created.

- Deploy with the defined configuration:

  helm install --values values.yaml loki grafana/loki -n grafana-loki --create-namespace --version 5.43.3

- Once it is done, we can verify if all of the pods in the grafana-loki namespace are up and running:

  kubectl -n grafana-loki get pod

  NAME                                          READY   STATUS    RESTARTS   AGE
  loki-backend-0                                2/2     Running   0          87s
  loki-canary-26mvx                             1/1     Running   0          88s
  loki-gateway-65cfb65c68-j55vw                 1/1     Running   0          88s
  loki-grafana-agent-operator-d7c684bf9-xbsnm   1/1     Running   0          88s
  loki-logs-pc8fw                               2/2     Running   0          73s
  loki-read-6dbf89cd59-frrd8                    1/1     Running   0          88s
  loki-write-0                                  1/1     Running   0          87s
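The install command in this step reads the MinIO credentials from values.yaml. If you would rather keep them out of the file, one alternative is to pass them at install time with Helm's `--set-string` flag, which takes precedence over entries in `--values` files. The sketch below is an assumption-laden variant of the same install; `install_loki` is a hypothetical wrapper name.

```shell
# Sketch: same chart, release name and version as in this tutorial, with the
# endpoint and credentials supplied on the command line.
# "install_loki" is a hypothetical wrapper name.
# Note: commas inside a --set-string value must be escaped with a backslash.

install_loki() {
  local endpoint="$1" access_key="$2" secret_key="$3"
  helm install loki grafana/loki \
    --namespace grafana-loki --create-namespace \
    --version 5.43.3 \
    --values values.yaml \
    --set-string "loki.storage.s3.endpoint=${endpoint}" \
    --set-string "loki.storage.s3.accessKeyId=${access_key}" \
    --set-string "loki.storage.s3.secretAccessKey=${secret_key}"
}

# Usage (requires the EKS context and the grafana Helm repository):
#   install_loki http://10.254.1.29 "<access key>" "<secret key>"
```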

Step 6: Connect Grafana Loki to our n2x.io network topology

We need to connect Grafana Loki (loki-backend, loki-write and loki-read components) to our n2x.io network topology to be able to use minio-tenant-1 object storage and the buckets created.

To connect new Kubernetes workloads to the n2x.io subnet, you can execute the following command:

n2xctl k8s workload connect

The command will typically prompt you to select the Tenant, Network, and Subnet from your available n2x.io topology options. Then, you can choose the workloads you want to connect by selecting them with the space key and pressing enter. In this case, we will select grafana-loki: loki-backend, grafana-loki: loki-write and grafana-loki: loki-read.

Now we can access the n2x.io WebUI to verify that the Kubernetes workloads are correctly connected to the subnet:

Step 7: Check Grafana Loki can use the buckets

Log collection and queries of data stored in Grafana Loki are outside the scope of this tutorial. We recommend you read the article Collecting logs with Grafana Loki across multiple sites if you are interested in how to collect and query logs from Grafana Loki.

The goal is to show how an application like Grafana Loki can make use of buckets from an Object Storage deployed with the MinIO Operator. So we are going to the MinIO Tenant Console and check if the buckets have data:
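As a command-line alternative to the Console check, the MinIO Client (mc) can list what Loki has written so far. This is a minimal sketch, assuming an mc alias for the Tenant has already been configured with `mc alias set`; the alias name `tenant1` and both function names are hypothetical.

```shell
# Sketch: count the objects in each Loki bucket with the MinIO Client (mc).
# Assumes an mc alias for the Tenant already exists; "tenant1" is a
# hypothetical alias name, as are the function names below.

# Count the lines of a recursive listing, one line per object.
bucket_object_count() {
  mc ls --recursive "$1" | wc -l | tr -d ' '
}

# Print one summary line per Loki bucket.
report_loki_buckets() {
  local alias_name="$1" bucket
  for bucket in loki-chunks loki-ruler loki-admin; do
    printf '%s: %s objects\n' "$bucket" \
      "$(bucket_object_count "${alias_name}/${bucket}")"
  done
}

# Usage:
#   report_loki_buckets tenant1
```

A count above zero for a bucket indicates that Loki has started writing to it; which buckets fill first depends on the Loki configuration and the logs being collected.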

We're done!

Grafana Loki is now using the buckets created in the MinIO Object Storage.

Conclusion

MinIO is a powerful and versatile object storage solution that can be used to store, manage, and retrieve large amounts of data. It is easy to use, highly scalable and can be integrated with a variety of other tools and technologies to create a robust and efficient data storage system.

In this article, we've learned how to build a Multi-Cloud Object Storage with the MinIO Operator and how n2x.io can help us create a unified and agnostic Object Storage solution that offers compatibility across various environments and platforms while avoiding vendor lock-in.